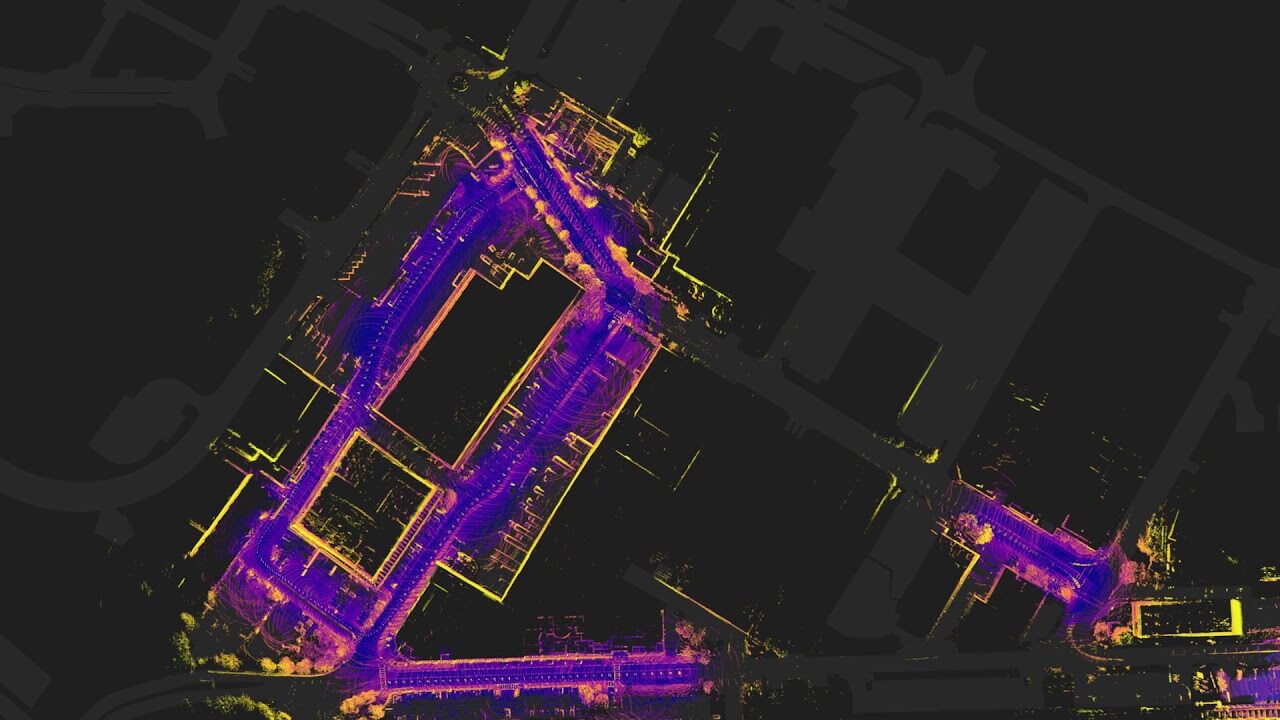

nuScenes by Aptiv

Large-scale open source dataset for autonomous driving.


Overview

Support for computer vision and autonomous driving research

Data Collection

Careful scene planning by Aptiv

Car Setup

Vehicle, Sensor and Camera Details

6 Cameras

- 12Hz capture frequency

- 1/1.8'' CMOS sensor of 1600x1200 resolution

- Bayer8 format for 1 byte per pixel encoding

- 1600x900 ROI is cropped from the original resolution to reduce processing and transmission bandwidth

- Auto exposure with exposure time limited to a maximum of 20 ms

- Images are unpacked to BGR format and compressed to JPEG

- See camera orientation and overlap in the figure below.

1 Spinning LiDAR

- 20Hz capture frequency

- 32 channels

- 360° Horizontal FOV, +10° to -30° Vertical FOV

- 80m-100m Range, Usable returns up to 70 meters, ± 2 cm accuracy

- Up to ~1.39 Million Points per Second

5 Long Range RADAR Sensors

- 77GHz

- 13Hz capture frequency

- Independently measures distance and velocity in one cycle using Frequency Modulated Continuous Wave

- Up to 250m distance

- Velocity accuracy of ±0.1 km/h
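
The sensor figures above imply some simple data-rate arithmetic. The following is a rough sketch in Python, not part of the nuScenes tooling: the constant and function names are made up for illustration, and the camera rate is the raw Bayer8 rate of the cropped ROI before JPEG compression.

```python
# Back-of-the-envelope data rates from the sensor specs listed above.
# All names are illustrative; the numbers are the ones quoted in the lists.

NUM_CAMERAS = 6
CAMERA_HZ = 12
BYTES_PER_PIXEL = 1              # Bayer8: 1 byte per pixel
FULL_RES = (1600, 1200)          # native sensor resolution
ROI_RES = (1600, 900)            # cropped region of interest

LIDAR_HZ = 20
LIDAR_MAX_POINTS_PER_SEC = 1_390_000   # "up to ~1.39 million"


def frame_bytes(resolution, bytes_per_pixel=BYTES_PER_PIXEL):
    """Size in bytes of one uncompressed frame at the given resolution."""
    width, height = resolution
    return width * height * bytes_per_pixel


full_frame = frame_bytes(FULL_RES)
roi_frame = frame_bytes(ROI_RES)

# Fraction of the full frame that survives the ROI crop.
roi_fraction = roi_frame / full_frame

# Raw (pre-JPEG) byte rate for all cameras combined.
raw_camera_rate = roi_frame * CAMERA_HZ * NUM_CAMERAS

# Upper bound on points in a single LiDAR scan.
max_points_per_scan = LIDAR_MAX_POINTS_PER_SEC // LIDAR_HZ

print(f"ROI keeps {roi_fraction:.0%} of each frame")
print(f"Raw camera data: {raw_camera_rate / 1e6:.2f} MB/s across {NUM_CAMERAS} cameras")
print(f"At most {max_points_per_scan} LiDAR points per scan")
```

Under these figures the ROI crop removes a quarter of every frame before any compression is applied.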

Flythrough of the nuScenes Teaser

Sensor Calibration

Data alignment between sensors and cameras

LiDAR extrinsics

Camera extrinsics

Camera intrinsic calibration

IMU extrinsics

Sensor Synchronization

In order to achieve cross-modality data alignment between the LiDAR and the cameras, the exposure on each camera was triggered when the top LiDAR sweeps across the center of the camera’s FOV. This method was selected as it generally yields good data alignment. Note that the cameras run at 12Hz while the LiDAR runs at 20Hz.

The 12 camera exposures per second are spread as evenly as possible across the 20 LiDAR scans, so not all LiDAR scans have a corresponding camera frame.

Reducing the frame rate of the cameras to 12Hz helps to reduce the compute, bandwidth and storage requirements of the perception system.
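
To make the 12Hz versus 20Hz relationship concrete, here is a minimal Python sketch of one way to spread 12 camera exposures over 20 LiDAR scans. The actual trigger schedule is not given here, so the even-spacing rule, the function names, and the assumption that the LiDAR rotates at a constant rate with azimuth measured from the start of each sweep are all illustrative.

```python
# Illustrative only: one possible "as even as possible" assignment of
# camera exposures to LiDAR scans within a single second.

CAMERA_HZ = 12
LIDAR_HZ = 20
SCAN_PERIOD_MS = 1000 / LIDAR_HZ   # 50 ms per full LiDAR rotation


def scans_with_camera_frame(camera_hz=CAMERA_HZ, lidar_hz=LIDAR_HZ):
    """Indices of the LiDAR scans (0..lidar_hz-1) that get a camera exposure."""
    return [k * lidar_hz // camera_hz for k in range(camera_hz)]


def trigger_offset_ms(camera_azimuth_deg):
    """Time into a sweep at which the beam crosses a camera's FOV center.

    Assumes constant rotation speed and azimuth measured from sweep start.
    """
    return (camera_azimuth_deg % 360) / 360 * SCAN_PERIOD_MS


matched = scans_with_camera_frame()
unmatched = [i for i in range(LIDAR_HZ) if i not in matched]

print("Scans with a camera frame:   ", matched)
print("Scans without a camera frame:", unmatched)
print("Trigger offset for a camera facing 180 degrees:", trigger_offset_ms(180), "ms")
```

With this rule, consecutive exposures land one or two scans apart, and 8 of every 20 scans have no matching camera frame.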

Data Annotation

Complex Label Taxonomy

Instances Per Label

vehicle.car

human.pedestrian.adult

movable_object.barrier

movable_object.trafficcone

vehicle.truck

vehicle.trailer

vehicle.construction

vehicle.bus.rigid
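
The label names above are dot-separated paths, which is what makes the taxonomy hierarchical. As a small illustration, the Python sketch below groups those eight labels by their top-level category. The helper name is hypothetical, and no instance counts are included because none are listed here.

```python
from collections import defaultdict

# The eight labels listed above, in the order shown.
LABELS = [
    "vehicle.car",
    "human.pedestrian.adult",
    "movable_object.barrier",
    "movable_object.trafficcone",
    "vehicle.truck",
    "vehicle.trailer",
    "vehicle.construction",
    "vehicle.bus.rigid",
]


def group_by_root(labels):
    """Map each top-level category to the remainder of its label paths."""
    groups = defaultdict(list)
    for label in labels:
        root, _, rest = label.partition(".")
        groups[root].append(rest)
    return dict(groups)


taxonomy = group_by_root(LABELS)
for root, children in taxonomy.items():
    print(f"{root}: {', '.join(children)}")
```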

Get Started with nuScenes

Ready to get started with nuScenes?

This tutorial will give you an overview of the dataset without the need to download it.

Please note that this page is a rendered version of a Jupyter Notebook.

Take the Tutorial