In order to launch a production batch you need:

- A calibrated project (See more about Calibration Batches)

- Quality tasks, of which there are two kinds:

  - Training tasks: A subset of audited tasks that Taskers complete before attempting live tasks from your production batch. These tasks make up the training course that all Taskers must complete (while meeting a certain quality bar) in order to onboard onto your project.

  - Evaluation tasks: A subset of audited tasks that help track the quality of Taskers' work. We serve these tasks to Taskers after they’ve onboarded onto your project. To the Tasker, an evaluation task looks like any other task on the project. However, since we already know the correct labels, we can evaluate how well the Tasker performed. This lets us ensure that Taskers keep meeting a high quality bar for as long as they’re working on the project. Taskers who drop below the quality threshold are automatically taken off the project.

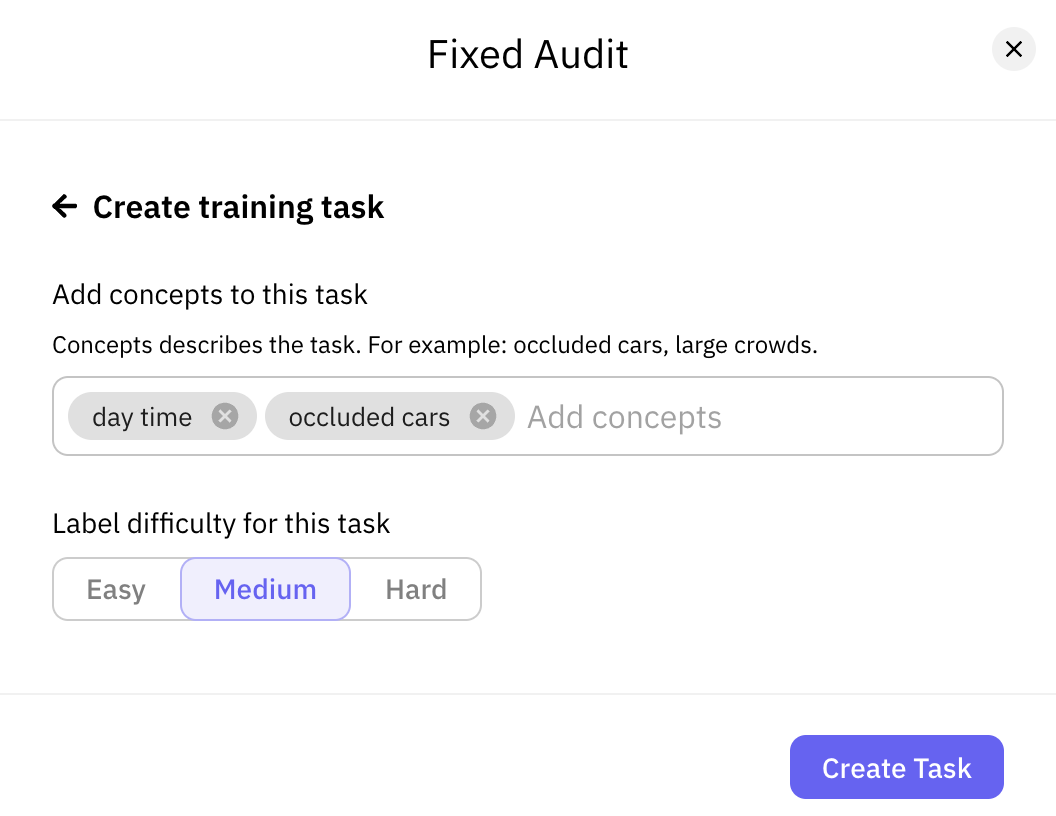

You can also add concepts and a difficulty to each quality task. Concepts describe what the quality task is about, whereas difficulty describes how hard the task is to complete. Tagging quality tasks with concepts and difficulties allows us to serve them to Taskers in a more balanced way, which gives a more holistic quality signal on production batches.
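To see how evenly your tags cover the space before relying on them, you can tally quality tasks per concept and difficulty pair. The following is a minimal sketch run on your own exported list of tasks; the dictionary layout, the task IDs, and the "occlusion" and "daytime" concepts are assumptions made for illustration, not a Scale Rapid schema.

```python
from collections import Counter

# Hypothetical quality tasks; the keys and most values are illustrative only.
quality_tasks = [
    {"id": "qt-1", "concepts": ["night time"], "difficulty": "Hard"},
    {"id": "qt-2", "concepts": ["night time", "occlusion"], "difficulty": "Medium"},
    {"id": "qt-3", "concepts": ["daytime"], "difficulty": "Easy"},
]

def tag_coverage(tasks):
    """Count quality tasks for every (concept, difficulty) pair that occurs."""
    coverage = Counter()
    for task in tasks:
        for concept in task["concepts"]:
            coverage[(concept, task["difficulty"])] += 1
    return coverage

coverage = tag_coverage(quality_tasks)
```

A pair that never occurs, such as `("daytime", "Hard")` here, has a count of zero, which is a hint that you may want to tag or create a task covering it.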

Quality Tasks: Training vs. Evaluation

In order to ensure quality of your labels, you'll need to decide on subsets of Training tasks and Evaluation tasks.

If you think the task would be a good one for all Taskers to complete before moving on to the live Production Batch tasks, it would make sense to make the task a Training task. Remember to think about your Training tasks as a set - make sure they cover a good breadth of the data variability of your dataset. These tasks should generally be easier, as it will be the first time a Tasker encounters your data.

If you think the task would be a good one for measuring the quality of your Production Batch tasks, it would make sense to make the task an Evaluation task. These tasks should generally be harder, since they will be randomly served to Taskers to gauge quality and accuracy. Note that because Evaluation tasks tend to be harder, your overall Production Batch quality should be higher than your Evaluation task quality.

Creating Quality Tasks

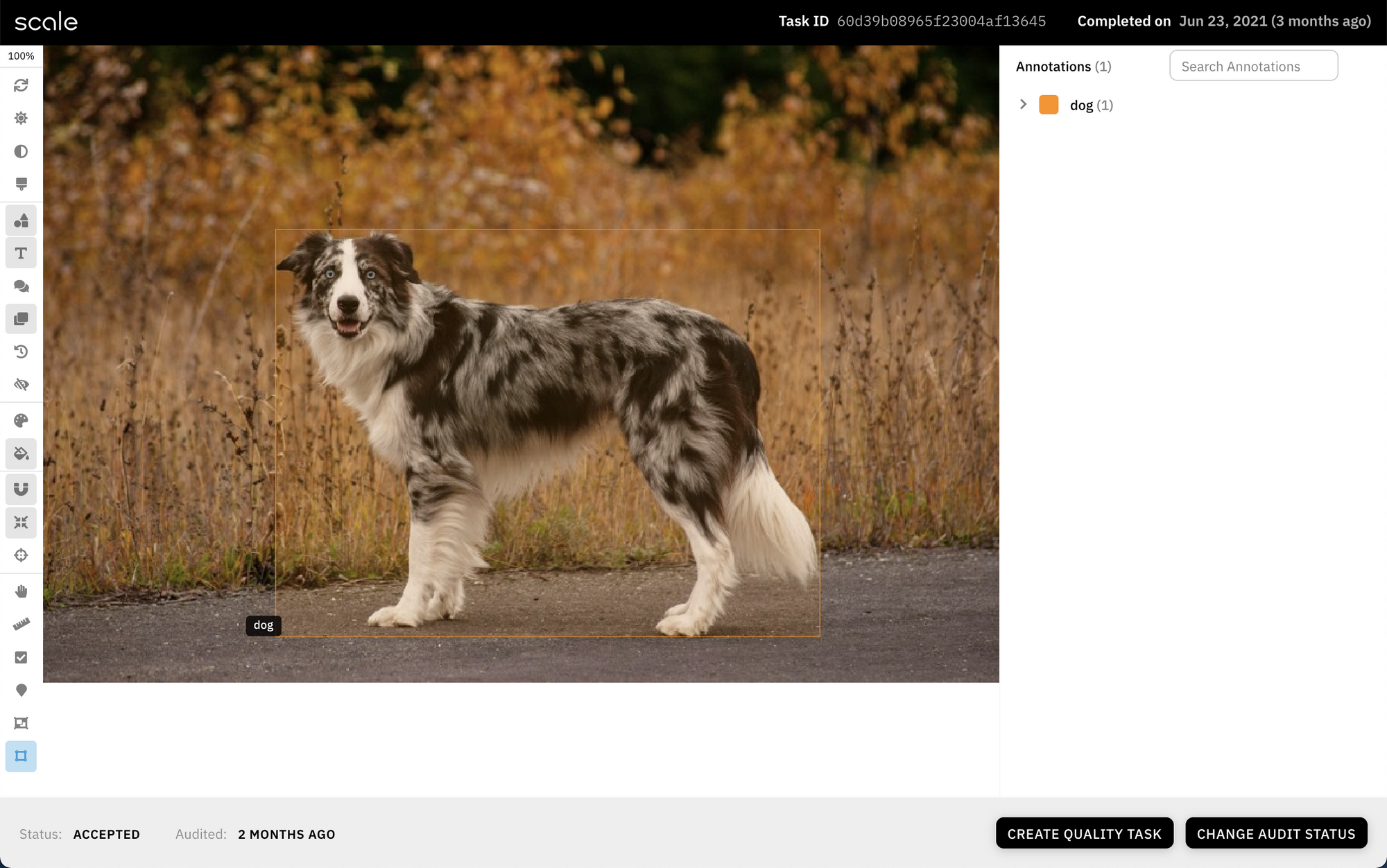

You can create a quality task from any audited task. For instance, you can take your Calibration Batch and after you audit each task, you can choose to make a quality task out of it.

It is important that you create a diverse set of quality tasks. For example, for a 3-class categorization problem, you would want your quality tasks split evenly across all 3 classes.
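One quick way to check that balance is to compare each class's share of your quality tasks against an even split. This is a minimal sketch, assuming you have the expected label of each quality task in a plain list; the class names and the 10% tolerance are hypothetical choices, not values from Scale Rapid.

```python
from collections import Counter

def class_balance(labels, classes, tolerance=0.10):
    """Return each class's share of `labels` and whether every share is
    within `tolerance` of an even split across `classes`."""
    if not labels or not classes:
        raise ValueError("labels and classes must be non-empty")
    counts = Counter(labels)
    target = 1 / len(classes)
    # Classes with no quality tasks at all get a share of 0.0.
    shares = {cls: counts[cls] / len(labels) for cls in classes}
    balanced = all(abs(share - target) <= tolerance for share in shares.values())
    return shares, balanced

# Hypothetical expected labels for nine quality tasks in a 3-class problem.
classes = ["cat", "dog", "bird"]
labels = ["cat", "dog", "bird"] * 3
shares, balanced = class_balance(labels, classes)
```

Passing the full list of classes explicitly means a class with no quality tasks at all still shows up, with a share of zero, instead of being silently ignored.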

Selecting Create Quality Task in the lower right corner will prompt you to choose the type of quality task (Training or Evaluation).

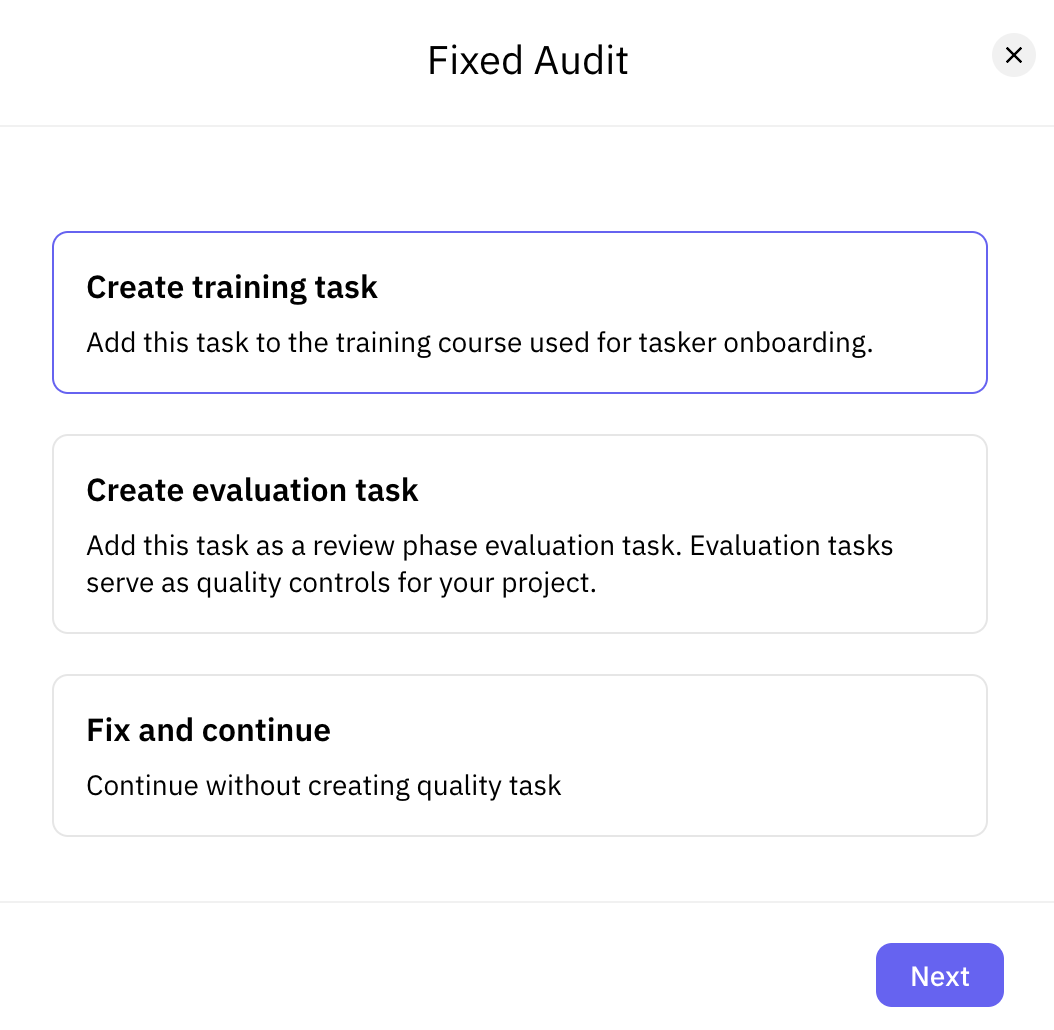

You can decide which type of task it should be.

You can then label the quality task with concepts and a difficulty.

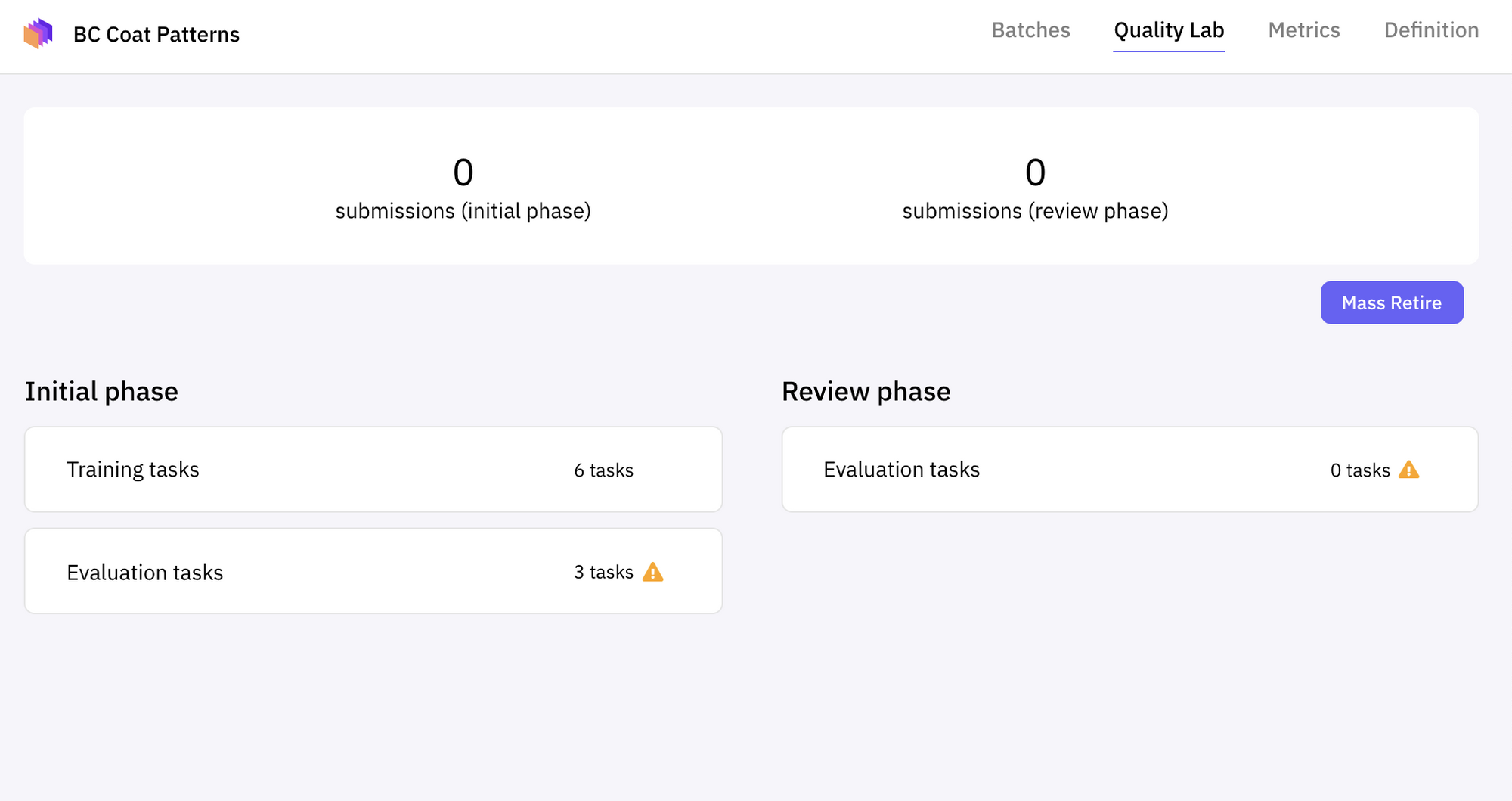

All the quality tasks you've created (both Training & Evaluation Tasks) can be found under Quality Lab in the upper navigation of each project.

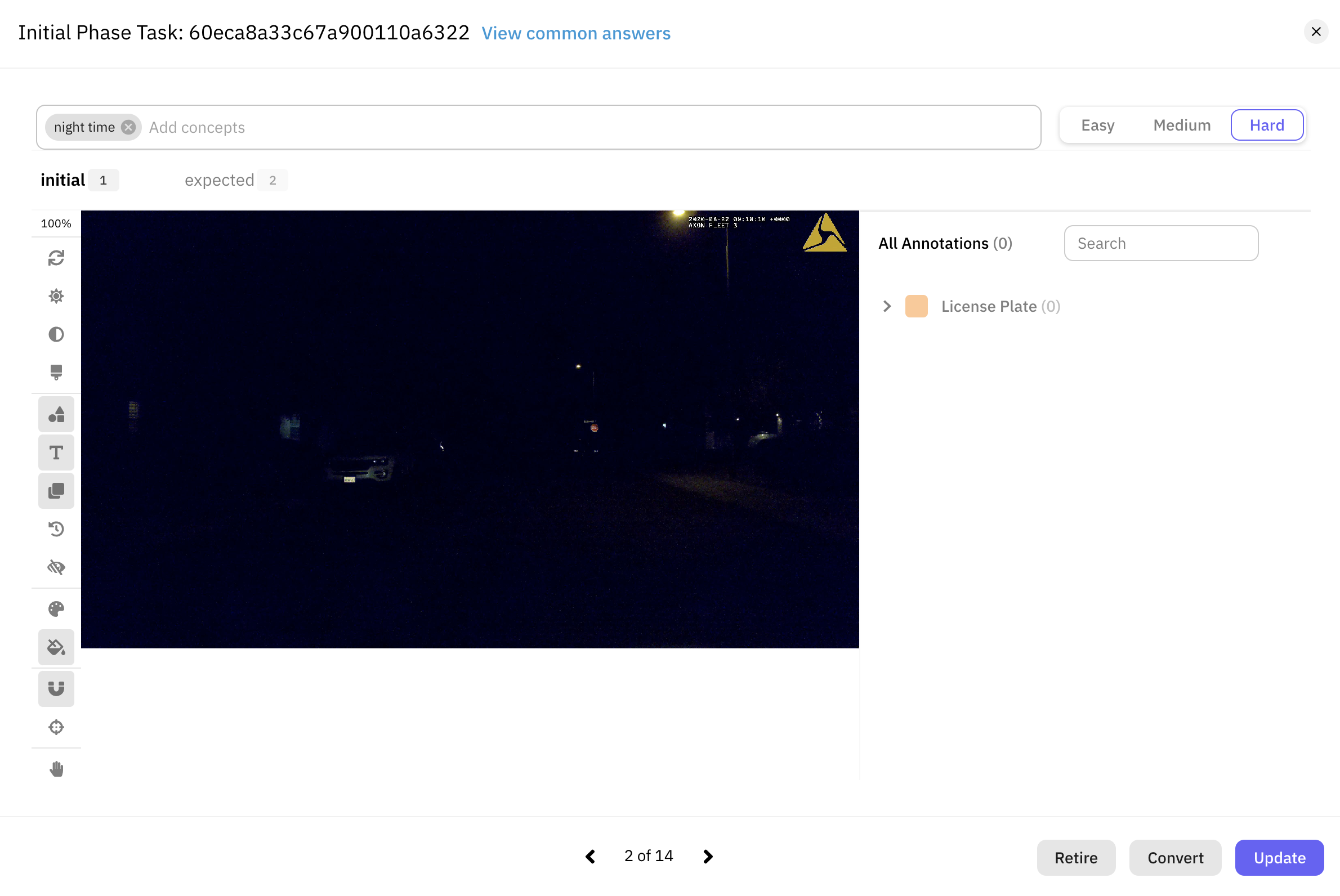

You can click on an evaluation task to show its corresponding concepts and difficulty. For example, the following image tests the ability to find license plates in a picture taken during the night.

This evaluation task has been tagged with the "night time" concept and has been assigned a difficulty of "Hard."

Evaluation Tasks are automatically split into Initial Phase and Review Phase tasks based on the changes you made in the audit. If you Rejected the attempted annotation and then made appropriate corrections to it, that Evaluation Task becomes a Review Phase Evaluation Task.

- Initial Phase Evaluation Tasks measure a Tasker’s ability to complete an annotation task from start to finish.

- Review Phase Evaluation Tasks measure a Tasker’s ability to take the completed work from another Tasker, and make corrections as needed.
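The split described above can be pictured as a simple rule. This is only a sketch of that rule: the `AuditResult` record and its field names are hypothetical, and treating every other audit outcome as Initial Phase is an assumption inferred from the text rather than documented behavior.

```python
from dataclasses import dataclass

@dataclass
class AuditResult:
    # Hypothetical audit record; field names are illustrative, not Scale's schema.
    task_id: str
    rejected: bool   # the auditor rejected the attempted annotation
    corrected: bool  # the auditor then corrected the attempt

def evaluation_phase(audit: AuditResult) -> str:
    """Return "review" for a rejected-and-corrected attempt, else "initial"."""
    if audit.rejected and audit.corrected:
        return "review"
    # Assumption: any other audit outcome yields an Initial Phase task.
    return "initial"

phases = [
    evaluation_phase(AuditResult("task-a", rejected=True, corrected=True)),
    evaluation_phase(AuditResult("task-b", rejected=False, corrected=False)),
]
```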

Recommendations for Quality Tasks

It is recommended that you create:

At the start of a project (before launching production)

- At least 5 training tasks

- At least 30 evaluation tasks
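If you track your quality tasks outside the dashboard, a small check against these minimums can flag a shortfall before launch. A minimal sketch, assuming a hypothetical list with one type string per quality task:

```python
from collections import Counter

# Minimums taken from the recommendations above.
RECOMMENDED_MINIMUMS = {"training": 5, "evaluation": 30}

def missing_quality_tasks(task_types):
    """Return how many more tasks of each type are needed to meet the minimums.

    `task_types` is a hypothetical list of strings, one per quality task,
    each either "training" or "evaluation".
    """
    counts = Counter(task_types)
    return {
        kind: minimum - counts[kind]
        for kind, minimum in RECOMMENDED_MINIMUMS.items()
        if counts[kind] < minimum
    }

current = ["training"] * 6 + ["evaluation"] * 22
shortfall = missing_quality_tasks(current)
```

With 6 training tasks and 22 evaluation tasks, `shortfall` is `{"evaluation": 8}`; an empty dictionary means both minimums are met.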

Ongoing delivery

- Refresh evaluation tasks on a weekly basis so that labelers don't 'learn' which tasks are evaluative. The more evaluation tasks you have, the better.

- Create new training tasks whenever you update the instructions or modify a rule, so that labelers are kept up to date. If you do change the instructions, make sure to replace any Quality Lab tasks that test labelers on the old rules with tasks that assess them on the new rules.

- Monitor the average accuracy on your evaluation tasks: if the scores are very high, that might signal that your evaluation tasks are too easy. On the other hand, if the average accuracy scores are very low, that might mean there is an error in your initial and/or expected responses. We suggest periodically reviewing the Quality Lab scores and double-checking the initial and expected responses to ensure everything is set up correctly.

Once you have determined that your quality task subsets represent your full dataset well and you have checked that all of your initial and expected responses are correct, you're ready to launch your Production Batch!
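The monitoring step can be sketched as a periodic pass over per-task scores. The input layout, the task IDs, and both thresholds below are assumptions for illustration; the guidance above does not give numeric cutoffs, so pick values that suit your project.

```python
from statistics import mean

def review_flags(scores_by_task, too_easy=0.95, too_low=0.60):
    """Flag evaluation tasks whose average accuracy looks suspicious.

    `scores_by_task` maps a task ID to a list of accuracy scores in [0, 1].
    Both thresholds are hypothetical defaults, not Scale Rapid values.
    """
    flags = {}
    for task_id, scores in scores_by_task.items():
        if not scores:
            continue  # no attempts yet, nothing to judge
        average = mean(scores)
        if average >= too_easy:
            flags[task_id] = "possibly too easy"
        elif average <= too_low:
            flags[task_id] = "check initial and expected responses"
    return flags

# Hypothetical scores collected for three evaluation tasks.
scores = {
    "eval-1": [1.0, 0.98, 0.97],
    "eval-2": [0.2, 0.4, 0.3],
    "eval-3": [0.8, 0.85],
}
flags = review_flags(scores)
```

Here `eval-1` is flagged as possibly too easy, `eval-2` is flagged for a response check, and `eval-3` is left alone.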

You've made it!

After creating Quality Tasks, your project is ready to start creating Regular Batches. These are the batches that make up the bulk of the data you want labelled. You can use batch names as metadata to help group your data. We usually recommend up to 5000 tasks per batch. Once you create your first Regular Batch, Scale Rapid will automatically start onboarding labelers onto your project.
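If your dataset is larger than the recommended batch size, you can plan the split before uploading. The sketch below only groups items locally and makes no Scale Rapid API calls; the naming scheme and the example prefix are hypothetical.

```python
def plan_batches(tasks, prefix, max_per_batch=5000):
    """Split `tasks` into consecutive groups of at most `max_per_batch` items,
    keyed by a batch name built from `prefix` and a running number."""
    if max_per_batch < 1:
        raise ValueError("max_per_batch must be at least 1")
    return {
        f"{prefix}-{start // max_per_batch + 1:03d}": tasks[start:start + max_per_batch]
        for start in range(0, len(tasks), max_per_batch)
    }

# 12,000 placeholder items standing in for the data you want labelled.
tasks = list(range(12000))
plan = plan_batches(tasks, "street-scenes")
batch_sizes = {name: len(items) for name, items in plan.items()}
```

This yields three batches, `street-scenes-001` and `street-scenes-002` with 5000 items each and `street-scenes-003` with the remaining 2000. Encoding a source or date in the prefix is one way to use batch names as grouping metadata.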

After launching a Production Batch, you can continue to add data and refine your project for future Production Batches.